Challenges and Emerging Topics in Explainable AI

8 January 2024

Ekkehard Schnoor, Researcher at Fraunhofer Heinrich Hertz Institute

Our previous article introduced basic notions of explainable artificial intelligence (XAI) in the context of natural disaster management (please see "Explainable Artificial Intelligence for Natural Disaster Management", published on 21 July 2023). Let us recall that XAI methods are of particular interest in the context of deep learning, which relies on powerful, highly non-linear machine learning models.

Deep neural networks have shown great empirical success in recent years, but their inner workings have often remained elusive. XAI methods aim to open up the black box of such opaque models, which lie at the heart of many modern machine learning and artificial intelligence applications.

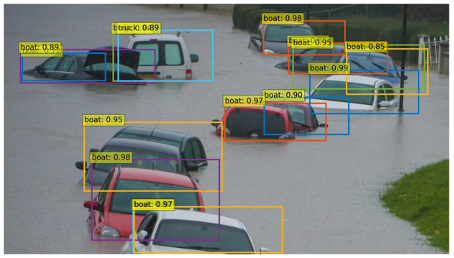

Traditional post-hoc attribution methods, such as Layer-wise Relevance Propagation (LRP) [1], compute so-called heatmaps that assign scores to the input features, quantifying their importance for the prediction. Such methods have also led to the discovery of so-called "Clever Hans predictors" [2], undesired decision strategies based on spurious correlations in the data. Such examples also arise in the context of natural disasters such as floods: in these extreme events, cars surrounded by water may be misclassified as boats. This is because training datasets of natural images typically show cars in a street environment and boats floating in water, which makes generalisation to extreme, unlikely events hard.

Figure with predictions provided by Nikos Militsis (AUTH), altered from the original. Available at: https://m.atcdn.co.uk/ect/media/f2e2c5a3883f44f5b31d62fcc2e3b00b.jpg
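To make the idea of attribution scores more concrete, the following is a minimal sketch of relevance propagation through a tiny two-layer ReLU network, using an epsilon-stabilised propagation rule of the kind used in the LRP literature. It is an illustration under stated assumptions, not the implementation behind the figure: the network, its weights (`W1`, `W2`), the zero biases and the helper names `dense`, `relu`, `lrp_epsilon` and `explain` are all hypothetical and chosen only for this example.

```python
def dense(a, W, b):
    """Affine layer: z_j = sum_i a_i * W[i][j] + b_j."""
    return [sum(a[i] * W[i][j] for i in range(len(a))) + b[j]
            for j in range(len(b))]


def relu(z):
    return [max(0.0, v) for v in z]


def lrp_epsilon(a, W, b, R_out, eps=1e-9):
    """Redistribute the relevance R_out of a dense layer's outputs
    onto its inputs a, proportionally to the contributions a_i * W[i][j]."""
    z = dense(a, W, b)
    # Stabilised ratio R_j / z_j; eps only guards against division by zero.
    s = [R_out[j] / (z[j] + (eps if z[j] >= 0 else -eps))
         for j in range(len(z))]
    return [a[i] * sum(W[i][j] * s[j] for j in range(len(z)))
            for i in range(len(a))]


def explain(x, W1, b1, W2, b2):
    """Forward pass, then backward relevance pass starting at the output."""
    h = relu(dense(x, W1, b1))
    out = dense(h, W2, b2)
    # Start from the output score and propagate layer by layer.
    R_hidden = lrp_epsilon(h, W2, b2, out)
    R_input = lrp_epsilon(x, W1, b1, R_hidden)
    return out, R_input


# Hypothetical toy weights (3 inputs, 2 hidden units, 1 output), zero biases.
W1 = [[1.0, -1.0], [0.5, 1.0], [-1.0, 0.5]]
B1 = [0.0, 0.0]
W2 = [[1.0], [-2.0]]
B2 = [0.0]

x = [1.0, 2.0, -1.0]
output, relevance = explain(x, W1, B1, W2, B2)
print("output:", output)
print("relevance per input feature:", relevance)
```

For this toy input the relevance scores come out at approximately 3, -3 and 2, which sum to the network output of 2. With zero biases the total relevance is conserved from layer to layer, and this is what allows the scores to be read as a decomposition of the prediction over the input features. For images, the same scores arranged on the pixel grid form the heatmap.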

Concept-based explanation techniques such as Concept Relevance Propagation (CRP) aim to go beyond simple saliency maps at the input level to obtain explanations in terms of human-understandable concepts. This leads to explanations which are easier to understand for human users, and which may help to reveal hidden context bias [3,4].

XAI also plays a crucial role in the European AI Act, legislation originally proposed by the European Commission in 2021 that has been adopted recently. This globally unique AI regulation distinguishes between different risk levels for applications of AI. The legislation emphasizes the importance of transparency and accountability in AI systems, and XAI is seen as a means to achieve these goals. XAI techniques enable AI systems to provide understandable explanations for their decisions and actions, allowing users and stakeholders to comprehend the reasoning behind AI-generated outcomes. Nevertheless, (legal) accountability in high-risk AI applications remains a complex issue.

While XAI research originally focused strongly on image classification using deep learning (typically feedforward/convolutional neural networks), novel research directions are emerging.

These include new architectures such as Transformers [5], which use attention mechanisms to capture relationships in sequences. While stemming from the field of natural language processing (NLP), where they revolutionized tasks such as machine translation, they have also been adapted for computer vision tasks (vision transformers). Also interesting from an XAI perspective are other learning paradigms such as Reinforcement Learning (RL), which models learning from experience. Here, an autonomous agent interacts with the environment and aims to maximize its cumulative reward. Compared to classification tasks, this poses several additional challenges [6]. Explainable Reinforcement Learning (XRL) focuses on making the decision-making process of reinforcement learning algorithms more understandable and interpretable. Other domains under investigation include time series data such as audio signals [7], complementing the research in the vision domain.
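As an illustration of the attention mechanism mentioned above, here is a minimal sketch of scaled dot-product attention as described in [5], written in plain Python for readability. The function names and the toy query, key and value vectors are assumptions made for this example. Real Transformer models add learned projections, multiple heads and further layers on top of this core operation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (outputs, weights) for lists of query, key and value vectors.

    weights[i][t] states how strongly query i attends to sequence position t.
    """
    d_k = len(K[0])
    weights = []
    for q in Q:
        # Similarity of the query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in K]
        weights.append(softmax(scores))
    # Each output is the attention-weighted average of the value vectors.
    outputs = [[sum(w[t] * V[t][c] for t in range(len(V)))
                for c in range(len(V[0]))]
               for w in weights]
    return outputs, weights


# Hypothetical toy sequence with three positions and two-dimensional vectors.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = scaled_dot_product_attention(Q, K, V)
print("attention weights:", weights)
print("outputs:", outputs)
```

Each row of `weights` is non-negative and sums to one, so it can be inspected directly. Whether such weights on their own amount to a faithful explanation of a model's prediction is a separate question.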

References

[1] Bach, Sebastian, et al. "On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation." PloS one 10.7 (2015): e0130140.

[2] Lapuschkin, Sebastian, et al. "Unmasking Clever Hans predictors and assessing what machines really learn." Nature Communications 10 (2019).

[3] Achtibat, Reduan, et al. "From attribution maps to human-understandable explanations through Concept Relevance Propagation." Nature Machine Intelligence 5.9 (2023): 1006-1019.

[4] Dreyer, Maximilian, et al. "Revealing Hidden Context Bias in Segmentation and Object Detection through Concept-specific Explanations." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023.

[5] Vaswani, Ashish, et al. "Attention Is All You Need." NeurIPS (2017).

[6] Milani, Stephanie, et al. "A survey of explainable reinforcement learning." arXiv preprint arXiv:2202.08434 (2022).

[7] Vielhaben, Johanna, et al. "Explainable AI for Time Series via Virtual Inspection Layers." arXiv preprint arXiv:2303.06365 (2023).