Enhancing Disaster Image Datasets with Diffusion Models and Attention Maps

10 June 2025

Eleonor Díaz, Computer Vision Engineer at Atos Spain

In the field of disaster response and environmental monitoring, obtaining high-quality, labelled imagery of natural disasters—such as wildfires and floods—remains a significant challenge. The unpredictable and dangerous nature of these events often prevents the collection of comprehensive visual data, resulting in a severe data scarcity. This lack of representative examples limits the effectiveness of the training of AI models on tasks such as detection, segmentation, and planning in emergency situations.

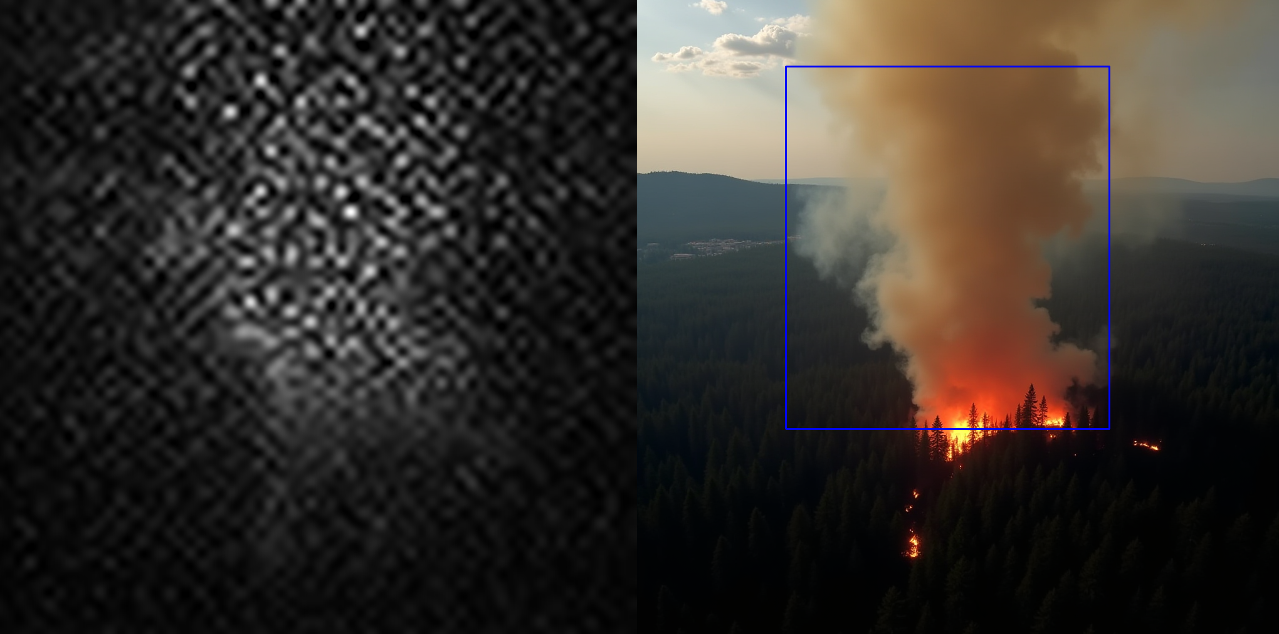

To address this issue, diffusion models are used to generate realistic synthetic images of natural disasters. These models are capable of producing diverse and high-fidelity scenes based on simple text prompts like "flooded neighbourhood" or "forest fire with smoke". This enables the creation of data that would otherwise be rare, dangerous, or costly to capture, improving model robustness.

To further enhance the usefulness of these synthetic images, some techniques are integrated to extract attention maps corresponding to specific keywords in the prompt (e.g., fire, smoke, water). These maps highlight the areas of the image where the model focuses most while generating a particular concept, effectively localising and segmenting semantic features during the diffusion process. The resulting attention maps can then be used as weak labels or segmentation masks, adding significant value for training and evaluation purposes.

To refine these maps for practical use, a post-processing step that isolates the most relevant visual regions is applied. This involves converting the attention map to greyscale and identifying the brightest areas, which indicate the strongest model attention. Using a clustering algorithm (DBSCAN), nearby bright pixels are grouped together—typically representing coherent visual elements such as a plume of smoke or a burning area. From these clusters, the one with the highest average intensity is selected and a clean binary mask is generated. This mask shows precisely where the model concentrated when interpreting a given concept and can be used both for segmentation tasks and model interpretability.

In summary, combining diffusion-based image generation with attention-guided token segmentation offers a powerful approach to overcoming the limitations of scarce visual disaster data. It enables the creation of synthetic datasets with corresponding ground truth masks, facilitating better training, analysis, and deployment of AI systems in disaster scenarios.